Code by Thai-Hoang Pham at Alt Inc.

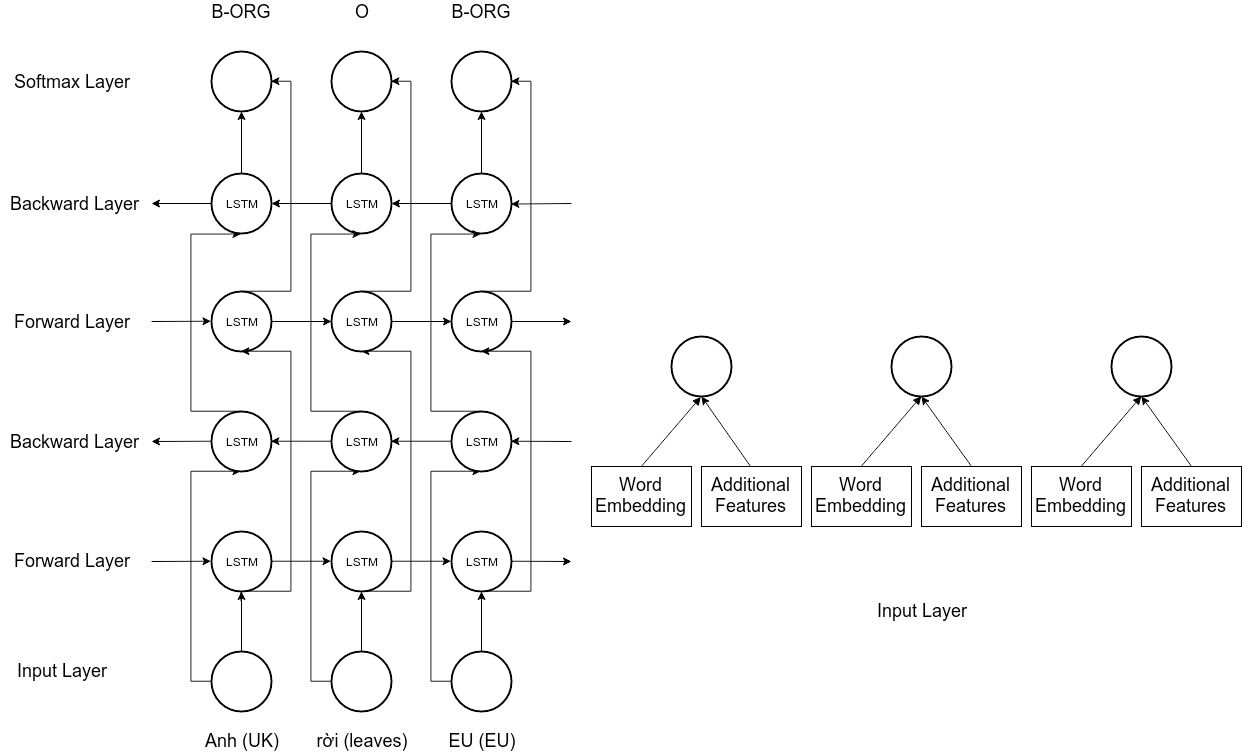

vie-ner-lstm is a fast implementation of the system described in the paper The Importance of Automatic Syntactic Features in Vietnamese Named Entity Recognition. The system recognizes named entities in Vietnamese text and is written in Python 2.7. Its architecture consists of two bidirectional LSTM layers followed by a feed-forward neural network; a softmax function then predicts the output sequence.
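To illustrate the final prediction step, here is a minimal sketch of a per-token softmax followed by picking the most probable tag. It is not taken from the vie-ner-lstm code: the tag set, the scores, and the function names `softmax` and `predict_tags` are made up for illustration, and the scores merely stand in for what the feed-forward layer would produce.

```python
import math

def softmax(scores):
    """Turn a list of raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def predict_tags(score_rows, tag_names):
    """Pick the most probable tag for each token independently."""
    tags = []
    for scores in score_rows:
        probs = softmax(scores)
        best = max(range(len(probs)), key=probs.__getitem__)
        tags.append(tag_names[best])
    return tags

# Hypothetical tag set and made-up scores for a three-token sentence.
TAGS = ["O", "B-LOC", "I-LOC"]
example_scores = [[2.0, 0.1, -1.0], [0.3, 3.5, 0.2], [0.1, 0.4, 2.2]]
print(predict_tags(example_scores, TAGS))
```

Each token is classified on its own here; no transition constraints between neighbouring tags are applied.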

Our system achieved an F1 score of 92.05% on the VLSP standard test set. The following table shows the performance of the system with each feature set; an x marks a feature that is enabled.

| Word2vec | POS | Chunk | Regex | F1 |
|---|---|---|---|---|
| | | | | 62.87% |
| x | | | | 74.02% |
| x | x | | | 85.90% |
| x | | x | | 86.79% |
| x | | | x | 74.13% |
| x | x | x | x | 92.05% |
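For readers unfamiliar with the metric, the sketch below computes entity-level precision, recall, and F1 from sets of entity spans. It is an illustration only: the evaluation script actually used for the numbers above is not described here, and the `(start, end, type)` span representation and the example spans are assumptions.

```python
def f1_score(gold, predicted):
    """Entity-level precision, recall and F1 over (start, end, type) spans."""
    gold, predicted = set(gold), set(predicted)
    correct = len(gold & predicted)  # spans that match exactly
    precision = correct / float(len(predicted)) if predicted else 0.0
    recall = correct / float(len(gold)) if gold else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1

# Made-up spans: two exact matches, one boundary error, one spurious entity.
gold_spans = [(1, 2, "LOC"), (13, 14, "LOC"), (20, 22, "PER")]
pred_spans = [(1, 2, "LOC"), (13, 14, "LOC"), (20, 21, "PER"), (30, 31, "ORG")]
precision, recall, f1 = f1_score(gold_spans, pred_spans)
print("P=%.4f R=%.4f F1=%.4f" % (precision, recall, f1))
```

In this sketch a predicted entity counts as correct only if both its boundaries and its type match a gold entity.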

This software depends on NumPy and Keras. Both must be installed before using vie-ner-lstm.

The simplest way to install them is with pip:

    $ pip install -U numpy keras

The input data format of vie-ner-lstm follows the VLSP 2016 campaign format. The dataset has four columns: word, POS tag, chunk tag, and named entity tag. For details, see the sample data in the 'data' directory. The table below shows an example Vietnamese sentence from the VLSP dataset.

| Word | POS | Chunk | NER |
|---|---|---|---|
| Từ | E | B-PP | O |
| Singapore | NNP | B-NP | B-LOC |
| , | CH | O | O |
| chỉ | R | O | O |
| khoảng | N | B-NP | O |
| vài | L | B-NP | O |
| chục | M | B-NP | O |
| phút | Nu | B-NP | O |
| ngồi | V | B-VP | O |
| phà | N | B-NP | O |
| là | V | B-VP | O |
| dến | V | B-VP | O |
| được | R | O | O |
| Batam | NNP | B-NP | B-LOC |
| . | CH | O | O |
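As a rough sketch of how this four-column format could be read, the code below parses column-formatted text into sentences and collects the named entities from the NER column. It is not the loader used by vie-ner-lstm. It assumes tab-separated columns, a blank line between sentences, and I- tags for entity continuations; check the files in the 'data' directory before relying on any of these. The sample is a shortened, ASCII-only excerpt of the sentence above.

```python
def read_sentences(text):
    """Split column-formatted text into sentences of (word, pos, chunk, ner)."""
    sentences, current = [], []
    for line in text.splitlines():
        line = line.strip()
        if not line:  # assumption: a blank line ends a sentence
            if current:
                sentences.append(current)
                current = []
            continue
        fields = line.split("\t")  # assumption: tab-separated columns
        if len(fields) != 4:
            raise ValueError("expected 4 columns, got %d: %r" % (len(fields), line))
        current.append(tuple(fields))
    if current:
        sentences.append(current)
    return sentences

def extract_entities(sentence):
    """Collect (text, type) pairs from B-/I- tags in the NER column."""
    entities, words, label = [], [], None
    for word, _pos, _chunk, tag in sentence:
        if tag.startswith("I-") and label == tag[2:]:
            words.append(word)  # continue the currently open entity
            continue
        if words:  # any other tag closes the open entity
            entities.append((" ".join(words), label))
            words, label = [], None
        if tag.startswith("B-"):
            words, label = [word], tag[2:]
        # an I- tag with no matching open entity is treated as outside
    if words:
        entities.append((" ".join(words), label))
    return entities

sample = (
    "Singapore\tNNP\tB-NP\tB-LOC\n"
    ",\tCH\tO\tO\n"
    "Batam\tNNP\tB-NP\tB-LOC\n"
    ".\tCH\tO\tO\n"
)
sentences = read_sentences(sample)
print(extract_entities(sentences[0]))
```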

To access the full VLSP 2016 campaign dataset, you need to sign the user agreement of the VLSP consortium.

You can run vie-ner-lstm with the following command:

    $ bash ner.sh

Arguments in the ner.sh script:

- word_dir: path to the word dictionary
- vector_dir: path to the vector dictionary
- train_dir: path to the training data
- dev_dir: path to the development data
- test_dir: path to the test data
- num_lstm_layer: number of LSTM layers used in the system
- num_hidden_node: number of hidden nodes in each hidden LSTM layer
- dropout: dropout rate for the input data (a float between 0 and 1)
- batch_size: size of the input batch used for training
- patience: number used for early stopping during training
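The patience argument can be illustrated with a small sketch of the usual early-stopping rule: training stops once the development score has failed to improve for `patience` consecutive epochs. This is an assumption about what the argument means, not code from vie-ner-lstm; the function name `early_stopping_epoch` and the scores are hypothetical.

```python
def early_stopping_epoch(dev_scores, patience):
    """Return (best_epoch, best_score), stopping after `patience`
    consecutive epochs without improvement on the development set."""
    best_score, best_epoch, waited = float("-inf"), -1, 0
    for epoch, score in enumerate(dev_scores):
        if score > best_score:
            best_score, best_epoch, waited = score, epoch, 0
        else:
            waited += 1
            if waited >= patience:
                break  # later epochs are never run
    return best_epoch, best_score

# Made-up development scores, one per epoch.
dev_scores = [0.61, 0.70, 0.74, 0.73, 0.74, 0.72, 0.80]
print(early_stopping_epoch(dev_scores, patience=3))
print(early_stopping_epoch(dev_scores, patience=4))
```

With these scores, a patience of 3 stops before the late improvement in the last epoch is reached, while a patience of 4 reaches it. A larger patience trades longer training for a lower chance of stopping too early.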

Note: The first time you run vie-ner-lstm, the system automatically downloads Vietnamese word embeddings from the internet. This may take a long time because the embedding set is about 1 GB. If the system cannot download the embedding set automatically, you can download it manually from here (vector, word) and put it into the embedding directory.

    @inproceedings{Pham:2017,
      title={The Importance of Automatic Syntactic Features in Vietnamese Named Entity Recognition},
      author={Thai-Hoang Pham and Phuong Le-Hong},
      booktitle={Proceedings of the 31st Pacific Asia Conference on Language, Information and Computation},
      year={2017},
    }

Thai-Hoang Pham <phamthaihoang.hn@gmail.com>

Alt Inc, Hanoi, Vietnam