Everything we hear and see makes up most of our daily experience throughout life. Remember the feeling of seeing your parents after a long day at school, or the burst of emotion on meeting your partner right after a painful surgery. Faces and incidents play a vital role in shaping our relationships with other people, gradually detaching us from our usual instincts. With the ongoing pandemic, the use of video calling and social media platforms has grown significantly and has deepened our dependence on technology, while the downsides of this audio-visual revolution are largely ignored. All of this increases the likelihood of media being altered to someone's liking, and with it the psychological manipulation of society as a whole.

The trend of doctored videos and images made by expert media content creators opened up a whole new horizon of possibilities for machine learning enthusiasts and IT professionals, resulting in the evolution of deepfakes.

Since the very beginning of multimedia, people have tried to fake genuine information for deceptive ends. But deepfakes leverage powerful techniques from machine learning and artificial intelligence to manipulate or generate visual and audio content with a high potential to deceive a general audience. The initial applications were intended to transform pipelines for filmmakers and 3D artists and to cut down on editing time. The growing similarity of the altered content to the original, both in quality and in feel of authenticity, is what makes this technology intriguing and horrifying at the same time.

The biggest dilemma that arises from this is: can we ever trust the faces we see? The posts we see every day, the pictures, the video messages, the interviews, all with the faces of people we know and trust: are they feeding us a hoax? Is media even real? Questions like these eventually push curious minds to look for the cracks in this mechanism.

Like many modern machine learning systems, deepfakes rely on multi-layer convolutional neural networks, usually arranged as an autoencoder or a Generative Adversarial Network (GAN), to learn useful features from training videos and images of the target faces. Training is repeated, checking against validation data, until the network can reproduce visually convincing facial content with altered characteristics, and the result is stored as a model. This model can then be integrated with other technologies or embedded into commercial software, given adequate computational power.
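To make the autoencoder idea a little more concrete, here is a minimal sketch in plain Python. It assumes one common layout, a single shared encoder with a separate decoder per identity, which is an assumption for illustration and not a description of any particular deepfake tool. Real systems use convolutional networks on images; this toy version uses small linear maps on made-up 4-number "faces", and the names `SharedEncoderSwapper`, `render` and `train_step` are hypothetical.

```python
import random

def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]

def random_matrix(rows, cols, rng):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

class SharedEncoderSwapper:
    """Toy linear autoencoder: one shared encoder, one decoder per identity."""

    def __init__(self, dim, latent, seed=0):
        rng = random.Random(seed)
        self.encoder = random_matrix(latent, dim, rng)
        self.decoders = {
            "A": random_matrix(dim, latent, rng),
            "B": random_matrix(dim, latent, rng),
        }

    def render(self, face, identity):
        # Encode with the shared encoder, decode with the chosen identity.
        return matvec(self.decoders[identity], matvec(self.encoder, face))

    def loss(self, face, identity):
        out = self.render(face, identity)
        return sum((o - x) ** 2 for o, x in zip(out, face)) / len(face)

    def train_step(self, face, identity, lr=0.02):
        # One gradient-descent step on the squared reconstruction error
        # (gradients are correct up to a constant factor).
        decoder = self.decoders[identity]
        code = matvec(self.encoder, face)
        err = [o - x for o, x in zip(matvec(decoder, code), face)]
        grad_code = [
            sum(decoder[i][k] * err[i] for i in range(len(err)))
            for k in range(len(code))
        ]
        for i, e in enumerate(err):
            for k, c in enumerate(code):
                decoder[i][k] -= lr * e * c
        for k, g in enumerate(grad_code):
            for j, x in enumerate(face):
                self.encoder[k][j] -= lr * g * x

# Made-up stand-ins for face images of two people (illustrative values only).
faces = {
    "A": [[0.9, 0.1, 0.8, 0.2], [0.8, 0.2, 0.9, 0.1], [0.85, 0.15, 0.85, 0.15]],
    "B": [[0.2, 0.7, 0.1, 0.9], [0.1, 0.8, 0.2, 0.8], [0.15, 0.75, 0.15, 0.85]],
}

def total_loss(model):
    return sum(model.loss(f, who) for who, group in faces.items() for f in group)

model = SharedEncoderSwapper(dim=4, latent=2)
loss_before = total_loss(model)
for _ in range(300):
    for who, group in faces.items():
        for face in group:
            model.train_step(face, who)
loss_after = total_loss(model)

# The "swap": encode a face of A, but decode it with B's decoder.
swapped = model.render(faces["A"][0], "B")
```

The point of the shared encoder in this sketch is that both identities are squeezed into the same compact representation, so feeding person A's encoding into person B's decoder yields output rendered in B's style.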

Current research on identifying deepfakes relies mainly on two approaches. The first, used extensively by many leading digital forensics experts, is to study the patterns of human body language, facial expressions, mouth movement, and other non-verbal cues, while looking for the weak spots that are not rendered the way they would be in a genuine video. This technique currently detects around 96% of deepfakes successfully. But as the models that generate deepfakes keep improving, it is uncertain how long this technique's predictions will stay reliable.

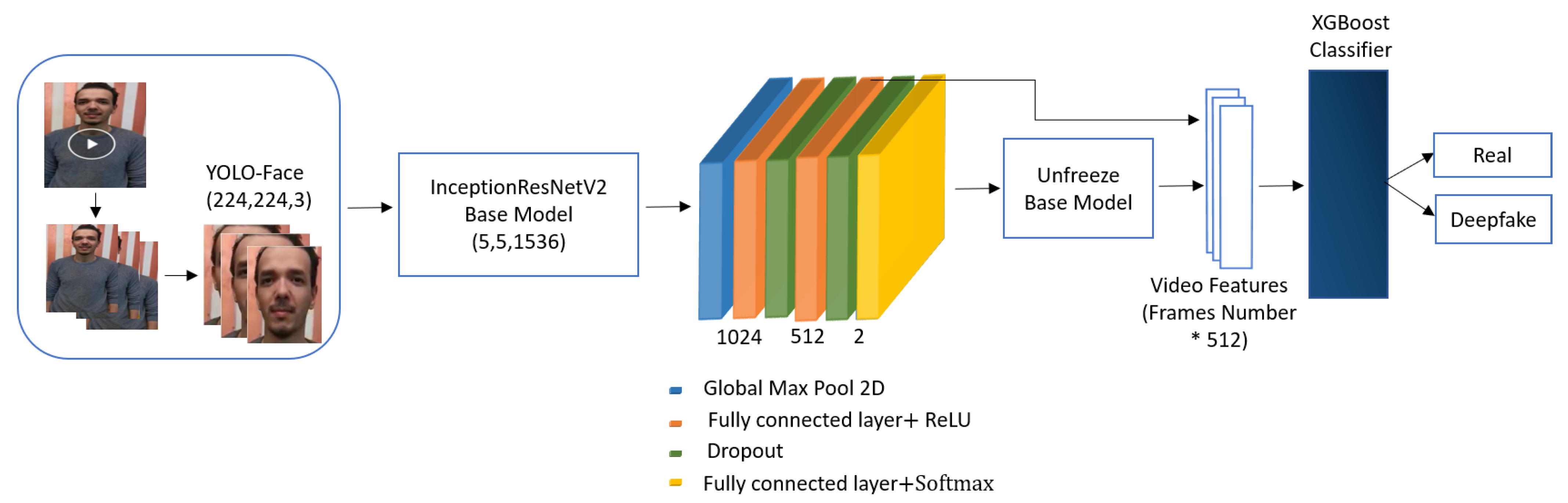

On the other hand, researchers at big tech firms like Google and Facebook, along with freelance enthusiasts on platforms like Kaggle and DrivenData, are working on their own machine learning approaches to devise a counteracting ML model by reverse-engineering the results from public datasets. As the research community looks to build upon these innovations, we should all think more broadly and consider solutions that go beyond analyzing images and video. Considering provenance, context and other cues may be the way to improve deepfake detection models.
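As one small illustration of what a provenance check could look like, the sketch below compares a media file's SHA-256 digest with a digest published by the original source. It assumes the publisher actually makes such a digest available through a channel you trust, which is an assumption and not something every outlet does. The function names and the sample bytes are hypothetical.

```python
import hashlib
import hmac

def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of the given bytes as a hex string."""
    return hashlib.sha256(data).hexdigest()

def matches_published_digest(media_bytes: bytes, published_hex: str) -> bool:
    """True if the media is byte-for-byte what the publisher hashed."""
    return hmac.compare_digest(sha256_hex(media_bytes), published_hex.strip().lower())

# Hypothetical stand-ins for a video file and an altered copy of it.
original_clip = b"frame-1 frame-2 frame-3"
tampered_clip = b"frame-1 frame-X frame-3"

published_digest = sha256_hex(original_clip)  # what the source would publish

original_ok = matches_published_digest(original_clip, published_digest)
tampered_ok = matches_published_digest(tampered_clip, published_digest)
```

A check like this only shows that a file is identical to what the source released. It says nothing about whether the source itself is honest, and a harmless re-encode of the same video will also fail it, so it complements detection models and does not replace them.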

Many other technologies are on the verge of production-level efficiency, but not every content consumer, like you and me, has access to them. And sometimes these videos may be so well made that it's practically impossible to tell them apart from genuine footage. It's just a matter of time before the perfect deepfake arrives.

What we need to do is rely on sources of media we trust, pay attention to where a piece of content is propagated from, and use common sense to judge whether what we see is even plausible. One of the major causes of the spread of misleading information is that it pushes us to think and react with almost no delay. We all need to take a second before settling on our preconceptions, and keep our eyes and ears open for anyone trying to invasively shape our beliefs.