This repository is an advanced implementation of AI agent techniques, focusing on:

- Multi-Agent Orchestration for coordinating multiple agents in AI workflows.

- Retrieval-Augmented Generation (RAG) framework to improve AI-generated responses.

- AI Agent Techniques such as Planning (ReAct flow), Reflection, etc. for enhanced reasoning.

Contents:

- Multi-Agent Orchestrator

- Introduction to RAG

- Advanced RAG Techniques

- Other AI Technologies

- Running Backend Only as API

- Running the Project with Docker

- Project Structure

- Contributing

- License

- References

This project enhances LLM capabilities using multi-agent workflows, integrating:

- ReAct for planning and execution.

- Reflection for iterative self-critique and refinement of responses.

- Multi-Agent Coordination for complex problem-solving.
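A ReAct loop alternates model reasoning ("Thought"), tool calls ("Action") and tool results ("Observation") until the model commits to an answer. The sketch below only illustrates that pattern and is not the repository's implementation. The `llm` callable, the `Action: tool[arg]` / `Final Answer:` text format, and the `lookup` tool are all hypothetical. A scripted model is used so the example runs without an API key.

```python
import re
from typing import Callable

# Hypothetical tool registry: tool name -> function taking one string argument
TOOLS: dict[str, Callable[[str], str]] = {
    "lookup": lambda key: {"capital_of_france": "Paris"}.get(key, "unknown"),
}

ACTION_RE = re.compile(r"Action:\s*(\w+)\[(.*)\]")
FINAL_RE = re.compile(r"Final Answer:\s*(.*)")


def react_loop(question: str, llm: Callable[[str], str], max_steps: int = 5) -> str:
    """Run Thought -> Action -> Observation cycles until a final answer or the step budget."""
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        step = llm(transcript)  # the model continues the transcript so far
        transcript += step + "\n"
        final = FINAL_RE.search(step)
        if final:
            return final.group(1).strip()
        action = ACTION_RE.search(step)
        if action:
            name, arg = action.group(1), action.group(2)
            tool = TOOLS.get(name)
            observation = tool(arg) if tool else f"unknown tool: {name}"
            transcript += f"Observation: {observation}\n"  # fed back on the next step
    return "No answer within step budget."


# Scripted stand-in for a real LLM call
scripted = iter([
    "Thought: I should look this up.\nAction: lookup[capital_of_france]",
    "Thought: The observation answers the question.\nFinal Answer: Paris",
])
print(react_loop("What is the capital of France?", lambda _prompt: next(scripted)))  # → Paris
```

Reflection can be layered on the same loop by sending the draft answer back to the model with a critique prompt and revising until the critique passes.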

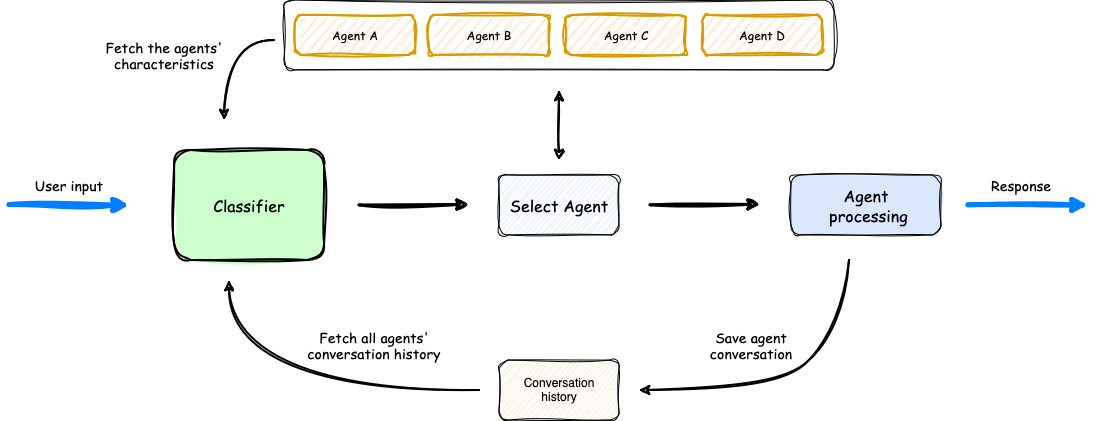

1. The user input is classified to determine which agent should handle it.

2. The orchestrator selects the best-suited agent based on the conversation history and each agent's capabilities.

3. The selected agent processes the input and generates a response.

4. The orchestrator updates the conversation history and returns the response.
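The four routing steps above can be sketched in a few lines. This is a hypothetical illustration, not the repository's orchestrator: a keyword-overlap score stands in for the classifier (the real one is not described here), and the `Agent`/`Orchestrator` classes, their fields, and the "stay with the previous agent when nothing matches" rule are assumptions chosen to show how history can influence selection.

```python
import re
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Agent:
    name: str
    keywords: set[str]            # hypothetical capability description used for routing
    handle: Callable[[str], str]  # produces the agent's response to one message


@dataclass
class Orchestrator:
    agents: list[Agent]
    default: Agent  # used when nothing matches and there is no history yet
    history: list[tuple[Agent, str, str]] = field(default_factory=list)  # (agent, input, reply)

    def select(self, text: str) -> Agent:
        """Classify the input by keyword overlap with each agent's capabilities."""
        words = set(re.findall(r"\w+", text.lower()))
        best = max(self.agents, key=lambda a: len(words & a.keywords))
        if words & best.keywords:
            return best
        if self.history:  # no match: stay with the agent from the previous turn
            return self.history[-1][0]
        return self.default

    def route(self, text: str) -> str:
        agent = self.select(text)
        reply = agent.handle(text)
        self.history.append((agent, text, reply))  # update conversation history
        return reply


weather = Agent("weather", {"weather", "forecast", "rain"}, lambda t: "It looks sunny.")
coder = Agent("code", {"python", "bug", "function"}, lambda t: "Here is a fix.")
chat = Agent("chat", set(), lambda t: "Happy to chat!")
orchestrator = Orchestrator([weather, coder], default=chat)
print(orchestrator.route("Will it rain tomorrow?"))  # → It looks sunny.
print(orchestrator.route("And the day after?"))      # → It looks sunny. (previous agent reused)
```

In a production setup the `select` step would more likely ask an LLM to choose among agent descriptions; keeping it a separate method makes that swap local.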


Large Language Models (LLMs) cannot draw on private data or on information newer than their training data. The Retrieval-Augmented Generation (RAG) framework mitigates this by retrieving relevant external documents and supplying them to the model before it generates a response.

- Indexing: Splits documents into chunks, creates embeddings, and stores them in a vector database.

- Retrieve: Finds the chunks most relevant to the user query.

- Augment: Combines the retrieved chunks with the query into a single prompt.

- Generate: Uses the LLM to produce a response grounded in the retrieved context.
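The four stages can be illustrated with a dependency-free sketch. It is not the repository's LlamaIndex/Qdrant pipeline: a bag-of-words term-count vector stands in for a real embedding model, a plain Python list stands in for the vector database, and the generation step stops at the prompt you would send to an LLM. The chunk size and overlap values are arbitrary assumptions.

```python
import math
import re
from collections import Counter


def chunk(text: str, size: int = 40, overlap: int = 10) -> list[str]:
    """Indexing: split text into overlapping windows of `size` words (requires size > overlap)."""
    words = text.split()
    if not words:
        return []
    step = size - overlap
    return [" ".join(words[i:i + size]) for i in range(0, max(len(words) - overlap, 1), step)]


def embed(text: str) -> Counter:
    """Toy embedding: term counts. A real pipeline would call an embedding model here."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(index: list[tuple[str, Counter]], query: str, k: int = 2) -> list[str]:
    """Retrieve: rank stored chunks by cosine similarity to the query."""
    q = embed(query)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [text for text, _ in ranked[:k]]


def augment(query: str, contexts: list[str]) -> str:
    """Augment: combine the retrieved chunks and the query into one prompt."""
    context_block = "\n".join(f"- {c}" for c in contexts)
    return f"Answer using only this context:\n{context_block}\n\nQuestion: {query}"


docs = [
    "A vector database stores embeddings for similarity search.",
    "A relational database stores rows in tables.",
]
index = [(c, embed(c)) for doc in docs for c in chunk(doc)]  # stand-in for a vector DB
question = "Which database stores embeddings?"
prompt = augment(question, retrieve(index, question, k=1))
print(prompt)  # Generate: send this prompt to the LLM of your choice
```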

This repository supports several advanced RAG techniques:

| Technique | Tools | Description |
|---|---|---|
| Naive RAG | LlamaIndex, Qdrant, Google Gemini | Basic retrieval-based response generation. |
| Hybrid RAG | LlamaIndex, Qdrant, Google Gemini | Combines dense vector search with BM25 keyword search so that both semantic and exact-term matches are retrieved. |
| HyDE RAG | LlamaIndex, Qdrant, Google Gemini | Uses Hypothetical Document Embeddings (HyDE): embeds an LLM-generated hypothetical answer instead of the raw query to improve retrieval accuracy. |
| RAG Fusion | LlamaIndex, LangSmith, Qdrant, Google Gemini | Generates sub-queries and ranks the combined results with Reciprocal Rank Fusion (RRF). |
| Contextual RAG | LlamaIndex, Qdrant, Google Gemini, Anthropic | Compresses retrieved documents to keep only the most relevant details. |
| Unstructured RAG | LlamaIndex, Qdrant, FAISS, Google Gemini, Unstructured | Handles text, tables, and images for diverse content retrieval. |
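RAG Fusion merges the ranked result lists of its sub-queries with Reciprocal Rank Fusion: each document scores the sum of 1 / (k + rank) over every list it appears in, with k = 60 as the commonly used default. A minimal sketch of the scoring follows; it is independent of how this repository wires it into LlamaIndex, and the document IDs are made up. The same function could fuse a vector-search list with a BM25 list, though whether the Hybrid RAG module combines results this way is not stated here.

```python
def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[tuple[str, float]]:
    """Fuse ranked lists of document IDs; rank 1 is the best hit in each list."""
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    # Highest fused score first
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


subquery_1_hits = ["d1", "d2", "d3"]  # hypothetical results for the first sub-query
subquery_2_hits = ["d3", "d1", "d4"]  # hypothetical results for the second sub-query
fused = reciprocal_rank_fusion([subquery_1_hits, subquery_2_hits])
print([doc_id for doc_id, _ in fused])  # → ['d1', 'd3', 'd2', 'd4']
```

Because RRF uses only ranks, it needs no score normalization across retrievers whose raw scores live on different scales.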

- 🤖 Supports Claude 3, GPT-4, and Gemini; the Gemini family of models is recommended for optimal performance.

- 🧠 Advanced AI planning and reasoning capabilities

- 🔍 Contextual keyword extraction for focused research

- 🌐 Seamless web browsing and information gathering

- 💻 Code writing in multiple programming languages

- 📊 Dynamic agent state tracking and visualization

- 💬 Natural language interaction via chat interface

- 📂 Project-based organization and management

- 🔌 Extensible architecture for adding new features and integrations

To run the backend separately, follow the instructions in the backend README.

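To run the full project, clone the repository and create the environment files from the examples:

```bash
git clone https://github.com/buithanhdam/maowrag-unlimited-ai-agent.git
cd maowrag-unlimited-ai-agent
cp ./frontend/.env.example ./frontend/.env
cp ./backend/.env.example ./backend/.env
```

Then fill in the values: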

```
# For backend .env
GOOGLE_API_KEY=<your_google_api_key>
OPENAI_API_KEY=<your_openai_api_key>
ANTHROPIC_API_KEY=<your_anthropic_api_key>
BACKEND_API_URL=http://localhost:8000
QDRANT_URL=http://localhost:6333
MYSQL_USER=your_mysql_user
MYSQL_PASSWORD=your_mysql_password
MYSQL_HOST=your_mysql_host
MYSQL_PORT=your_mysql_port
MYSQL_DB=your_mysql_db
MYSQL_ROOT_PASSWORD=root_password
AWS_ACCESS_KEY_ID=
AWS_SECRET_ACCESS_KEY=
AWS_REGION_NAME=
AWS_STORAGE_TYPE=
AWS_ENDPOINT_URL=

# For frontend .env
NEXT_PUBLIC_BACKEND_API_URL=http://localhost:8001
```
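Empty values and leftover placeholders are easy to miss before starting the containers. The hypothetical checker below parses simple `KEY=VALUE` lines and reports keys that are missing, empty, or still hold an `<angle-bracket>` placeholder. Which keys are actually mandatory is an assumption (it likely depends on the LLM provider and storage you use), so adjust `REQUIRED_BACKEND`; placeholders such as `your_mysql_user` are not detected.

```python
from pathlib import Path


def parse_env(text: str) -> dict[str, str]:
    """Parse simple KEY=VALUE lines; skips blanks and # comments (no quote or export handling)."""
    values: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip()
    return values


def missing_keys(values: dict[str, str], required: list[str]) -> list[str]:
    """Keys that are absent, empty, or still hold an <angle-bracket> placeholder."""
    return [k for k in required if not values.get(k) or values[k].startswith("<")]


# Assumption: adjust this list to the providers and services you actually enable.
REQUIRED_BACKEND = ["GOOGLE_API_KEY", "QDRANT_URL", "MYSQL_USER", "MYSQL_PASSWORD",
                    "MYSQL_HOST", "MYSQL_PORT", "MYSQL_DB"]

env_file = Path("backend/.env")
if env_file.exists():
    print("Missing or placeholder values:", missing_keys(parse_env(env_file.read_text()), REQUIRED_BACKEND))
```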

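Build and start all services:

```bash
docker-compose up --build
```

Next, create the MySQL database and user. Open a shell in the MySQL container and log in as root:

```bash
docker exec -it your-container-name bash
mysql -u root -p
```

Enter the root password (configured in `.env` or `docker-compose.yml`), then run the following SQL queries. Use the same user name and password as `MYSQL_USER` and `MYSQL_PASSWORD` in `backend/.env`:

```sql
CREATE DATABASE maowrag;
CREATE USER 'user'@'%' IDENTIFIED BY '1';
GRANT ALL PRIVILEGES ON maowrag.* TO 'user'@'%';
FLUSH PRIVILEGES;
```

Once the containers are running, the services are available at:

- Frontend: http://localhost:3000

- Backend: http://localhost:8000

- Qdrant: ports 6333 and 6334

- MySQL: port 3306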
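To confirm that each service is listening on the host ports listed above, a small TCP probe is enough. This sketch assumes the default port mappings from this section (6333 and 6334 are Qdrant's default REST and gRPC ports); a successful connection only shows that something accepts connections on the port, not that the application behind it is healthy.

```python
import socket

# Host ports listed above; adjust if docker-compose.yml maps them differently.
SERVICES = {
    "Frontend": 3000,
    "Backend": 8000,
    "Qdrant (HTTP)": 6333,
    "Qdrant (gRPC)": 6334,
    "MySQL": 3306,
}


def is_port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, or host unreachable
        return False


if __name__ == "__main__":
    for name, port in SERVICES.items():
        status = "up" if is_port_open("localhost", port) else "not reachable"
        print(f"{name:15} localhost:{port:<5} {status}")
```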

Stop the services:

```bash
docker-compose down
```

Project structure:

```
📦 maowrag-unlimited-ai-agent
├── backend/                # Backend source code
│   ├── Dockerfile.backend
│   ├── requirements.txt
├── frontend/               # Frontend source code
│   ├── Dockerfile.frontend
│   ├── next.config.js
├── docker-compose.yml      # Docker Compose setup
├── Jenkinsfile             # CI/CD configuration
```

Contributions are welcome! Please submit an issue or a pull request to improve this project.

This project is licensed under the MIT License.