
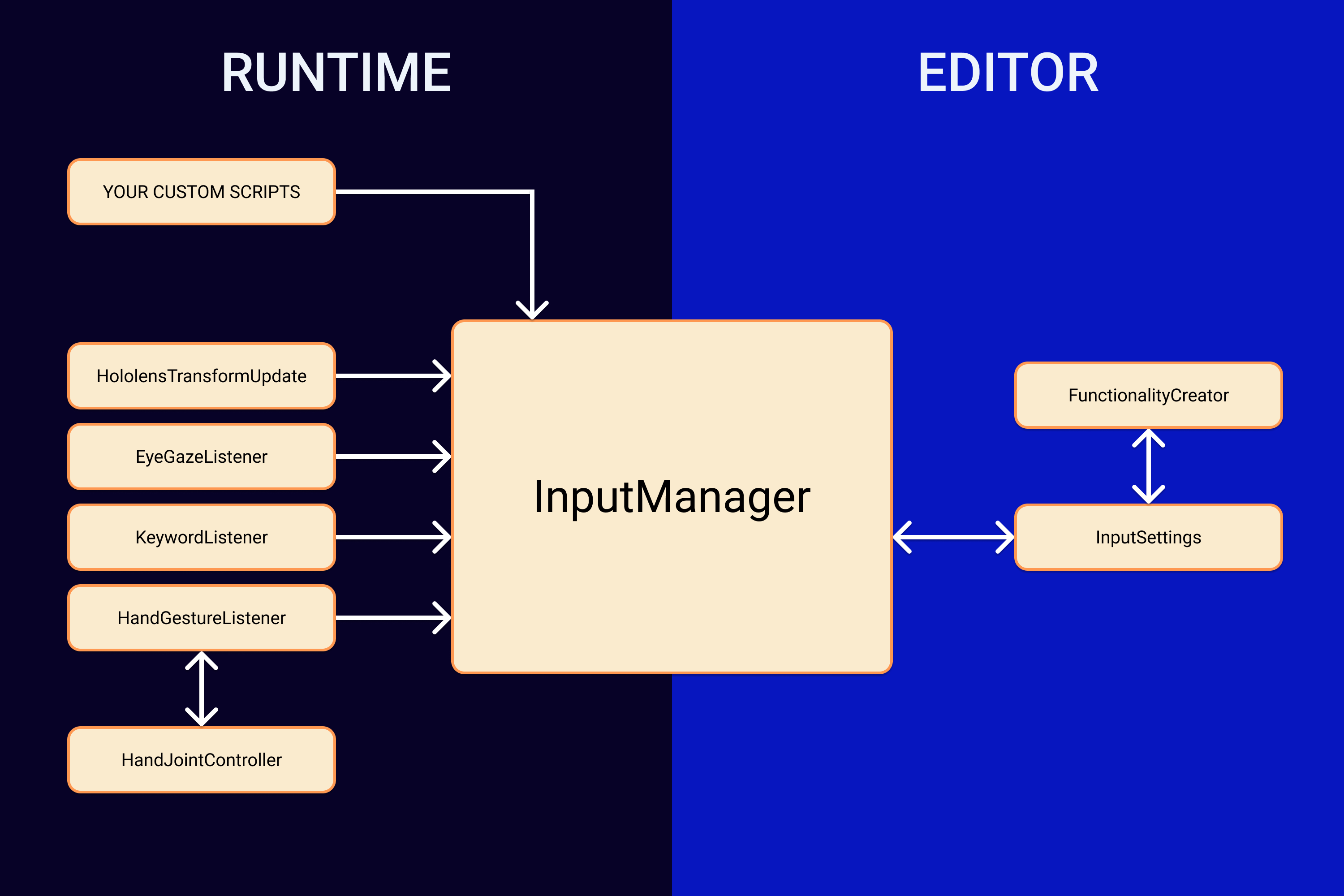

Architecture

Bouvet Development Kit (BDK) has an architecture that separates the editor from the runtime. In the editor, the user toggles features in the InputSettings script, and the Functionality creator adds and removes the relevant game objects in the scene. When the application starts, the InputManager class sets up the different input methods. At runtime, each input method raises events in InputManager when its call-parameters are met, and the user's code listens to these events in InputManager.
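The event flow described above follows the observer pattern: input methods raise named events on a central hub, and user code subscribes to them. The sketch below models this in Python purely for illustration. The names `InputSource`, `subscribe`, `unsubscribe`, and `raise_event` are hypothetical stand-ins, not BDK's actual C# API.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, DefaultDict, List


@dataclass
class InputSource:
    """Hypothetical stand-in for the InputSource passed with every event."""
    name: str


Handler = Callable[[InputSource], None]


class InputManager:
    """Minimal event hub: input methods raise events, user code listens."""

    def __init__(self) -> None:
        self._handlers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, event: str, handler: Handler) -> None:
        """Attach user code to a named event such as "OnInputDown"."""
        self._handlers[event].append(handler)

    def unsubscribe(self, event: str, handler: Handler) -> None:
        """Detach a previously attached handler, if present."""
        if handler in self._handlers[event]:
            self._handlers[event].remove(handler)

    def raise_event(self, event: str, source: InputSource) -> None:
        """Called by an input method once its call-parameters are met."""
        # Iterate over a copy so handlers may unsubscribe while being called.
        for handler in list(self._handlers[event]):
            handler(source)


received: List[str] = []
manager = InputManager()
manager.subscribe("OnInputDown", lambda src: received.append(src.name))
manager.raise_event("OnInputDown", InputSource("right hand"))
manager.raise_event("OnInputUp", InputSource("right hand"))  # no listeners: ignored
print(received)  # ['right hand']
```

With this design, input methods never need to know who is listening. User code only needs a reference to the hub.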

BDK also contains several structs and helper classes. See SpatialMappingObserver for how BDK handles spatial mapping.

InputManager is the main class of the application. It is the developer's access point to the different input methods and the place where developers attach their own classes to BDK's action listeners.

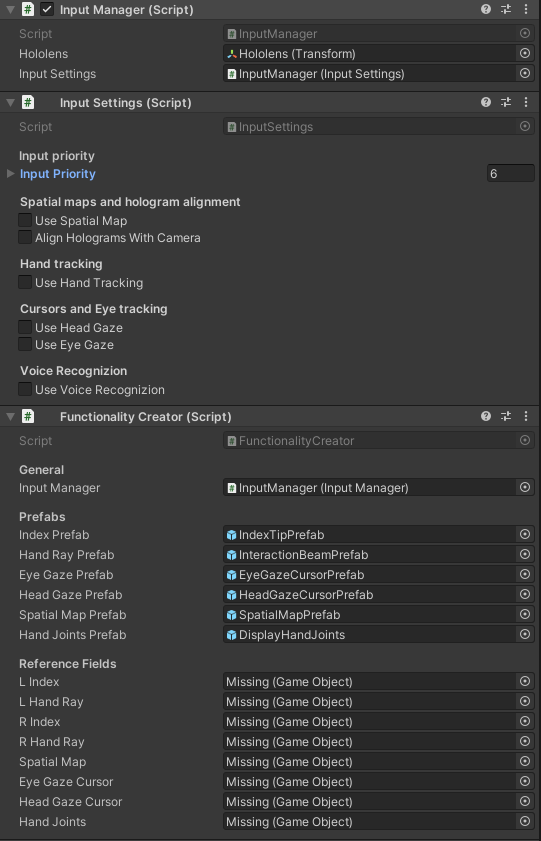

InputManager holds a reference to InputSettings, which can be edited in the Inspector to toggle which input methods are active.

Based on these settings, InputManager sets up the corresponding input methods: hand tracking, voice recognition, and eye tracking.
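One way to picture this setup step is as a function that maps the settings toggles to the components that get created. In the Python sketch below, the flag names in `InputSettings` are hypothetical, and the real fields may be named differently. The component names are the ones described on this page.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class InputSettings:
    """Hypothetical toggles; the real InputSettings fields may differ."""
    hand_tracking: bool = True
    voice_recognition: bool = False
    eye_tracking: bool = False
    head_tracking: bool = True


def components_to_create(settings: InputSettings) -> List[str]:
    """List the listener components InputManager would set up for these settings."""
    components: List[str] = []
    if settings.hand_tracking:
        # HandGestureListener in turn sets up HandJointController.
        components += ["HandGestureListener", "HandJointController"]
    if settings.voice_recognition:
        components.append("KeywordListener")
    if settings.eye_tracking:
        components.append("EyeGazeListener")
    if settings.head_tracking:
        components.append("HololensTransformUpdate")
    return components


print(components_to_create(InputSettings()))
# ['HandGestureListener', 'HandJointController', 'HololensTransformUpdate']
print(components_to_create(InputSettings(hand_tracking=False, voice_recognition=True)))
# ['KeywordListener', 'HololensTransformUpdate']
```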

Based on the settings in InputSettings, InputManager can set up hand tracking. Here is a short description of the classes it sets up:

- HandGestureListener: This class calls many of the event functions for dealing with hand tracking input. It also sets up HandJointController.

- HandJointController: This class updates every individual joint of both hands each frame.
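A per-frame joint update like the one HandJointController performs can be sketched as follows. Each frame, the latest reported position of every joint is copied into a per-hand table, and joints that were not reported that frame are marked as lost. This is a hypothetical Python model. The joint names, the `Vec3` tuple type, the `JointState` class, and the `tracked` flag are assumptions for illustration, and Unity transforms are not modeled.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class JointState:
    """Hypothetical record of one joint's last known position."""
    position: Vec3 = (0.0, 0.0, 0.0)
    tracked: bool = False


class HandJointController:
    """Hypothetical model: one table of joint states per hand."""

    def __init__(self, joint_names: Iterable[str]) -> None:
        names = list(joint_names)
        self.hands: Dict[str, Dict[str, JointState]] = {
            hand: {name: JointState() for name in names} for hand in ("left", "right")
        }

    def update(self, frame: Dict[str, Dict[str, Vec3]]) -> None:
        """Call once per frame with the joint positions reported for each hand."""
        for hand, joints in self.hands.items():
            reported = frame.get(hand, {})
            for name, state in joints.items():
                if name in reported:
                    state.position = reported[name]
                    state.tracked = True
                else:
                    # Keep the last known position but flag the joint as lost.
                    state.tracked = False


controller = HandJointController(["wrist", "index_tip"])
controller.update({"right": {"wrist": (0.1, 1.2, 0.4), "index_tip": (0.12, 1.25, 0.5)}})
controller.update({"right": {"wrist": (0.1, 1.2, 0.45)}})
print(controller.hands["right"]["wrist"])  # JointState(position=(0.1, 1.2, 0.45), tracked=True)
print(controller.hands["right"]["index_tip"].tracked)  # False
print(controller.hands["left"]["wrist"].tracked)  # False
```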

If hand tracking is enabled in InputSettings, these classes trigger the following events in InputManager when their requirements are met:

- OnSourceFound

- OnSourceLost

- OnInputUp

- OnInputUpdated

- OnInputDown

- OnManipulationStarted

- OnManipulationUpdated

- OnManipulationEnded

- OnProximityStarted

- OnProximityUpdated

- OnProximityEnded

Each of these events contains an InputSource as part of its parameters.
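The Down/Updated/Up and Started/Updated/Ended events in this list suggest a small per-hand state machine. The Python sketch below shows one hypothetical way such a machine could turn frame-by-frame pinch data into event names. It assumes these rules, which are not taken from BDK:

- A pinch raises OnInputDown.
- Holding the pinch raises OnInputUpdated.
- Releasing the pinch raises OnInputUp.
- Moving more than `threshold` (assumed to be metres) while pinched starts a manipulation.

The `PinchTracker` class is hypothetical, and proximity and source events are not modeled.

```python
from math import dist
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


class PinchTracker:
    """Hypothetical per-hand state machine turning pinch samples into event names."""

    def __init__(self, threshold: float = 0.02) -> None:
        self.threshold = threshold  # assumed movement (metres) before a manipulation starts
        self.pinching = False
        self.manipulating = False
        self.start: Vec3 = (0.0, 0.0, 0.0)

    def update(self, pinching: bool, position: Vec3) -> List[str]:
        """Feed one frame of hand data; return the events to raise, in order."""
        events: List[str] = []
        if pinching and not self.pinching:
            events.append("OnInputDown")
            self.start = position
        elif pinching:
            events.append("OnInputUpdated")
            if self.manipulating:
                events.append("OnManipulationUpdated")
            elif dist(position, self.start) > self.threshold:
                self.manipulating = True
                events.append("OnManipulationStarted")
        elif self.pinching:
            if self.manipulating:
                events.append("OnManipulationEnded")
                self.manipulating = False
            events.append("OnInputUp")
        self.pinching = pinching
        return events


tracker = PinchTracker()
frames = [
    (True, (0.0, 0.0, 0.0)),    # pinch begins
    (True, (0.05, 0.0, 0.0)),   # moved 5 cm: manipulation starts
    (True, (0.06, 0.0, 0.0)),   # still moving
    (False, (0.06, 0.0, 0.0)),  # pinch released
]
for pinching, position in frames:
    print(tracker.update(pinching, position))
# ['OnInputDown']
# ['OnInputUpdated', 'OnManipulationStarted']
# ['OnInputUpdated', 'OnManipulationUpdated']
# ['OnManipulationEnded', 'OnInputUp']
```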

Based on the settings in InputSettings, InputManager can set up voice recognition. Here is a short description of the class it sets up:

- KeywordListener: This class calls the event functions for dealing with voice input. It stores the key phrases and their associated actions in a ConcurrentDictionary, listens for voice input, and invokes the matching action when a phrase is recognized.

If voice recognition is enabled and a command is recognized, the KeywordListener will invoke the corresponding action and trigger the following event:

This event contains an InputSource as part of its parameters.
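The phrase-to-action lookup described for KeywordListener can be sketched in Python as follows. .NET's ConcurrentDictionary has no direct Python equivalent, so a plain dict guarded by a lock stands in for it. The method names `add_keyword`, `remove_keyword`, and `on_phrase_recognized` are hypothetical. The speech recognizer itself is not modeled; the recognized phrase is passed in as a string.

```python
import threading
from typing import Callable, Dict, List


class KeywordListener:
    """Sketch of a phrase-to-action table that is safe to use from several threads."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._actions: Dict[str, Callable[[], None]] = {}

    def add_keyword(self, phrase: str, action: Callable[[], None]) -> None:
        with self._lock:
            self._actions[phrase] = action

    def remove_keyword(self, phrase: str) -> None:
        with self._lock:
            self._actions.pop(phrase, None)

    def on_phrase_recognized(self, phrase: str) -> bool:
        """Invoke the action for a recognized phrase; return False if none is registered."""
        with self._lock:
            action = self._actions.get(phrase)
        if action is None:
            return False
        # Run the action outside the lock so it may add or remove keywords itself.
        action()
        return True


log: List[str] = []
listener = KeywordListener()
listener.add_keyword("show menu", lambda: log.append("menu shown"))
print(listener.on_phrase_recognized("show menu"))  # True
print(listener.on_phrase_recognized("hide menu"))  # False
print(log)  # ['menu shown']
```

A thread-safe table matters here because recognition results may arrive on a different thread from the one where keywords are registered.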

Based on the settings in InputSettings, InputManager can set up eye tracking. Here is a short description of the class it sets up:

- EyeGazeListener: This class calls the event functions for dealing with eye tracking input.

If eye tracking is enabled and the eyes are properly calibrated, the EyeGazeListener will invoke the corresponding actions and trigger the following event:

Each of these events contains an InputSource as part of its parameters.
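The calibration check described above acts as a gate: gaze data is forwarded only when eye tracking produced a sample and the eyes are calibrated. This hypothetical Python sketch models that gate. The `GazeSample` fields and the `on_gaze` callback are assumptions, and the callback stands in for the InputManager event.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class GazeSample:
    """Hypothetical per-frame reading from the eye tracker."""
    calibrated: bool
    origin: Vec3
    direction: Vec3


class EyeGazeListener:
    """Forwards gaze samples to a callback only when the eyes are calibrated."""

    def __init__(self, on_gaze: Callable[[GazeSample], None]) -> None:
        self.on_gaze = on_gaze  # stand-in for the InputManager event

    def update(self, sample: Optional[GazeSample]) -> bool:
        """Call once per frame; return True if an event was raised."""
        if sample is None or not sample.calibrated:
            return False  # no data or uncalibrated eyes: raise nothing
        self.on_gaze(sample)
        return True


seen: List[Vec3] = []
gaze = EyeGazeListener(lambda s: seen.append(s.direction))
gaze.update(GazeSample(calibrated=False, origin=(0.0, 0.0, 0.0), direction=(0.0, 0.0, 1.0)))
gaze.update(None)
gaze.update(GazeSample(calibrated=True, origin=(0.0, 1.6, 0.0), direction=(0.0, 0.0, 1.0)))
print(seen)  # [(0.0, 0.0, 1.0)]
```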

Head tracking is also enabled based on the settings in InputSettings. When it is enabled, a script called HololensTransformUpdate is added to the HoloLens GameObject. This script calls the following event in InputManager:

This event contains an InputSource as part of its parameters.