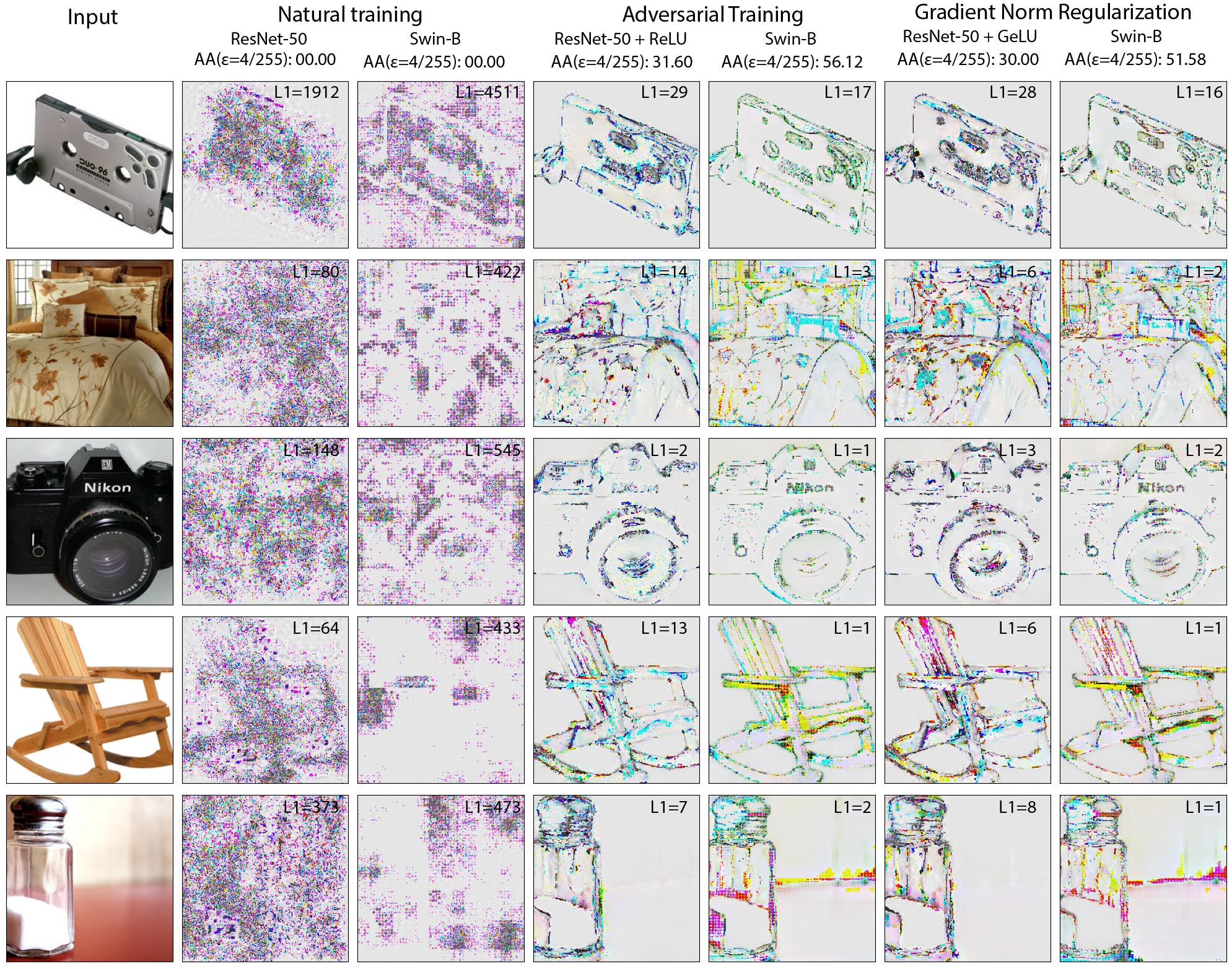

This repository contains the code for the paper "Characterizing Model Robustness via Natural Input Gradients".

[Project page] [Paper]

Set up the conda environment as follows:

```bash
conda create -n RIG python=3.9 -y
conda activate RIG
conda install pytorch torchvision torchaudio pytorch-cuda=12.4 -c pytorch -c nvidia
pip install timm==1.0.9 pyyaml==6.0.2 scipy==1.13.1 gdown==5.2.0 pandas==2.2.3
```

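If you use pip instead of conda, first install a CUDA-enabled PyTorch build (torch, torchvision), then install the remaining dependencies:

```bash
pip install timm==1.0.9 pyyaml==6.0.2 scipy==1.13.1 gdown==5.2.0 pandas==2.2.3
```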
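After installing, one quick way to confirm that the environment matches the pins above is to compare installed versions with `importlib.metadata`. This is a minimal sketch; `check_pins` and `PINS` are hypothetical names, not part of the repository. PyTorch is left out on purpose because its reported version may carry a build suffix.

```python
"""Compare installed package versions against the pinned install command.

Hypothetical helper: `check_pins` and `PINS` are illustrative, not part of
this repository.
"""
from importlib import metadata

# Versions pinned in the pip command above (PyTorch deliberately omitted).
PINS = {
    "timm": "1.0.9",
    "PyYAML": "6.0.2",
    "scipy": "1.13.1",
    "gdown": "5.2.0",
    "pandas": "2.2.3",
}


def check_pins(pins):
    """Return {package: (expected, installed)} for every pin that is not met.

    `installed` is None when the package is not installed at all.
    """
    mismatches = {}
    for name, expected in pins.items():
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            installed = None
        if installed != expected:
            mismatches[name] = (expected, installed)
    return mismatches


if __name__ == "__main__":
    problems = check_pins(PINS)
    if not problems:
        print("All pinned packages match.")
    for name, (expected, installed) in problems.items():
        print(f"{name}: expected {expected}, found {installed or 'not installed'}")
```

An empty result means every pinned package is installed at exactly the listed version.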

The models referenced in the paper can be downloaded from the following links:

| Model | Clean acc. (%) | AutoAttack acc. (%, standard) |
|---|---|---|
| GradNorm - SwinB | 77.78 | 51.58 |
| EdgeReg - SwinB | 76.80 | 35.02 |
| GradNorm - ResNet50+GeLU | 60.34 | 30.00 |
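To read the table as a robustness gap, the drop from clean to AutoAttack accuracy can be computed directly from the numbers above. The `accuracy_drop` helper and the robust/clean ratio are illustrative summaries, not metrics reported in the paper.

```python
"""Clean-to-AutoAttack accuracy drop for the models in the table above.

The numbers are copied from the table; `accuracy_drop` (clean minus robust,
in percentage points) is an illustrative summary, not a metric from the paper.
"""

# (clean accuracy %, AutoAttack accuracy %) from the table above.
RESULTS = {
    "GradNorm - SwinB": (77.78, 51.58),
    "EdgeReg - SwinB": (76.80, 35.02),
    "GradNorm - ResNet50+GeLU": (60.34, 30.00),
}


def accuracy_drop(clean, robust):
    """Percentage points lost under attack, rounded to two decimals."""
    return round(clean - robust, 2)


if __name__ == "__main__":
    for model, (clean, robust) in RESULTS.items():
        print(f"{model}: drop {accuracy_drop(clean, robust):.2f} pp, "
              f"robust/clean ratio {robust / clean:.3f}")
```

For example, GradNorm - SwinB loses 26.20 points under AutoAttack, compared with 41.78 for EdgeReg - SwinB.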

Training and evaluation require the ImageNet dataset, available from https://www.image-net.org.
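Before editing any configs, it can help to check that the local ImageNet copy has the layout the data loaders expect. The sketch below assumes an ImageFolder-style layout (`root/<class_folder>/<image>`) with 1000 class folders in both the training and validation splits; that layout is an assumption, not something this README specifies. The official validation images are distributed in a single flat folder, so they typically need to be sorted into class subfolders first. `count_class_dirs` and `check_split` are hypothetical helpers.

```python
"""Sanity-check an ImageNet split before pointing the configs at it.

Assumption: the loaders read ImageFolder-style directories
(root/<class_folder>/<image>) with 1000 class folders per split.
`count_class_dirs` and `check_split` are hypothetical helpers.
"""
from pathlib import Path

EXPECTED_CLASSES = 1000
IMAGE_SUFFIXES = {".jpeg", ".jpg", ".png"}


def count_class_dirs(root):
    """Return (number of class folders, number of images inside them)."""
    class_dirs = [d for d in Path(root).iterdir() if d.is_dir()]
    n_images = sum(
        1
        for d in class_dirs
        for f in d.iterdir()
        if f.is_file() and f.suffix.lower() in IMAGE_SUFFIXES
    )
    return len(class_dirs), n_images


def check_split(root, expected_classes=EXPECTED_CLASSES):
    """Raise ValueError if `root` does not look like an ImageFolder split."""
    n_classes, n_images = count_class_dirs(root)
    if n_classes != expected_classes:
        raise ValueError(
            f"{root}: found {n_classes} class folders, expected {expected_classes}"
        )
    if n_images == 0:
        raise ValueError(f"{root}: no images found inside the class folders")
    return n_classes, n_images
```

For example, `check_split("/path/to/imagenet/val")` (a placeholder path) returns the class and image counts or raises `ValueError`. Counting visits every file, so it takes a while on the full training split.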

The main training code is largely adapted from ARES-Bench, with minor changes to the code and the training recipes. Our models can be reproduced by running `run_train.sh`. Before running it, change the ImageNet `train_dir` and `eval_dir` locations in the config files (`./configs_cifar`, `./configs_finetuning`, `./configs_liu2023`, `./configs_logit`, `./configs_train`) to point to your local copy.
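Rather than editing each config by hand, the `train_dir` and `eval_dir` entries can be rewritten with a short script. This sketch assumes the configs are YAML files (`*.yaml` or `*.yml`, consistent with the `pyyaml` dependency) with each path on its own `train_dir: ...` or `eval_dir: ...` line; check that against the actual files before running it. It rewrites lines with a regular expression instead of round-tripping through `yaml.safe_load`/`yaml.safe_dump`, so comments and key order elsewhere in each file survive. `set_dataset_dirs` and `update_configs` are hypothetical names.

```python
"""Point every config's train_dir / eval_dir at a local ImageNet copy.

Assumption: configs are *.yaml / *.yml files with one `train_dir: ...` or
`eval_dir: ...` entry per line. `set_dataset_dirs` and `update_configs`
are hypothetical helpers, not part of this repository.
"""
import re
from pathlib import Path

CONFIG_DIRS = [
    "./configs_cifar",
    "./configs_finetuning",
    "./configs_liu2023",
    "./configs_logit",
    "./configs_train",
]

# Matches a whole "train_dir: ..." or "eval_dir: ..." line, keeping its indentation.
_KEY_RE = re.compile(
    r"^(?P<indent>[ \t]*)(?P<key>train_dir|eval_dir)[ \t]*:.*$", re.MULTILINE
)


def set_dataset_dirs(text, train_dir, eval_dir):
    """Return `text` with every train_dir / eval_dir value replaced."""
    new_values = {"train_dir": train_dir, "eval_dir": eval_dir}

    def _sub(match):
        key = match.group("key")
        return f"{match.group('indent')}{key}: {new_values[key]}"

    # A function replacement avoids backslash-escape processing in the paths.
    return _KEY_RE.sub(_sub, text)


def update_configs(train_dir, eval_dir, config_dirs=CONFIG_DIRS):
    """Rewrite the configs in place and return the list of files that changed."""
    changed = []
    for config_dir in config_dirs:
        for path in sorted(Path(config_dir).glob("*.y*ml")):
            old = path.read_text()
            new = set_dataset_dirs(old, train_dir, eval_dir)
            if new != old:
                path.write_text(new)
                changed.append(path)
    return changed
```

Calling `update_configs("/path/to/imagenet/train", "/path/to/imagenet/val")` from the repository root returns the files it modified. Trailing comments on the rewritten lines are dropped, and paths containing characters that are special in YAML (such as `: ` or `#`) would need quoting.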

For consistency, evaluation follows ARES-Bench exactly. Our results can be reproduced by running `run_eval.sh` after replacing `YOUR_MODEL_PATH` and `YOUR_IMAGENET_VAL_PATH` with your own values.
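The placeholder substitution in `run_eval.sh` can also be scripted, which leaves the tracked file untouched. This sketch assumes both placeholders appear literally in `run_eval.sh`; the output name `run_eval_local.sh` and the helper names `fill_placeholders` and `write_local_script` are hypothetical.

```python
"""Write a filled-in copy of run_eval.sh.

Assumption: YOUR_MODEL_PATH and YOUR_IMAGENET_VAL_PATH appear literally in the
source script. The helpers and the default output name run_eval_local.sh are
hypothetical, not part of this repository.
"""
from pathlib import Path

PLACEHOLDERS = ("YOUR_MODEL_PATH", "YOUR_IMAGENET_VAL_PATH")


def fill_placeholders(script_text, model_path, imagenet_val_path):
    """Replace every occurrence of both placeholders; raise if one is absent."""
    values = dict(zip(PLACEHOLDERS, (model_path, imagenet_val_path)))
    for name, value in values.items():
        if name not in script_text:
            raise ValueError(f"placeholder {name} not found in script")
        script_text = script_text.replace(name, value)
    return script_text


def write_local_script(model_path, imagenet_val_path,
                       src="run_eval.sh", dst="run_eval_local.sh"):
    """Write a filled-in, executable copy of `src` to `dst` and return its path."""
    text = Path(src).read_text()
    out = Path(dst)
    out.write_text(fill_placeholders(text, model_path, imagenet_val_path))
    out.chmod(0o755)  # rwxr-xr-x, so the copy can be run directly
    return out
```

The generated copy can then be run with `bash run_eval_local.sh`. Paths containing spaces may need quoting, depending on how the script uses them.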

```bibtex
@inproceedings{rodriguezmunoz2024characterizing,
  title={Characterizing model robustness via natural input gradients},
  author={Adrián Rodríguez-Muñoz and Tongzhou Wang and Antonio Torralba},
  booktitle={Proceedings of the European Conference on Computer Vision (ECCV)},
  year={2024},
  url={}
}
```